Introduction to AI Governance

- lidiavelkova

- Jan 23

- 2 min read

What is Responsible AI?

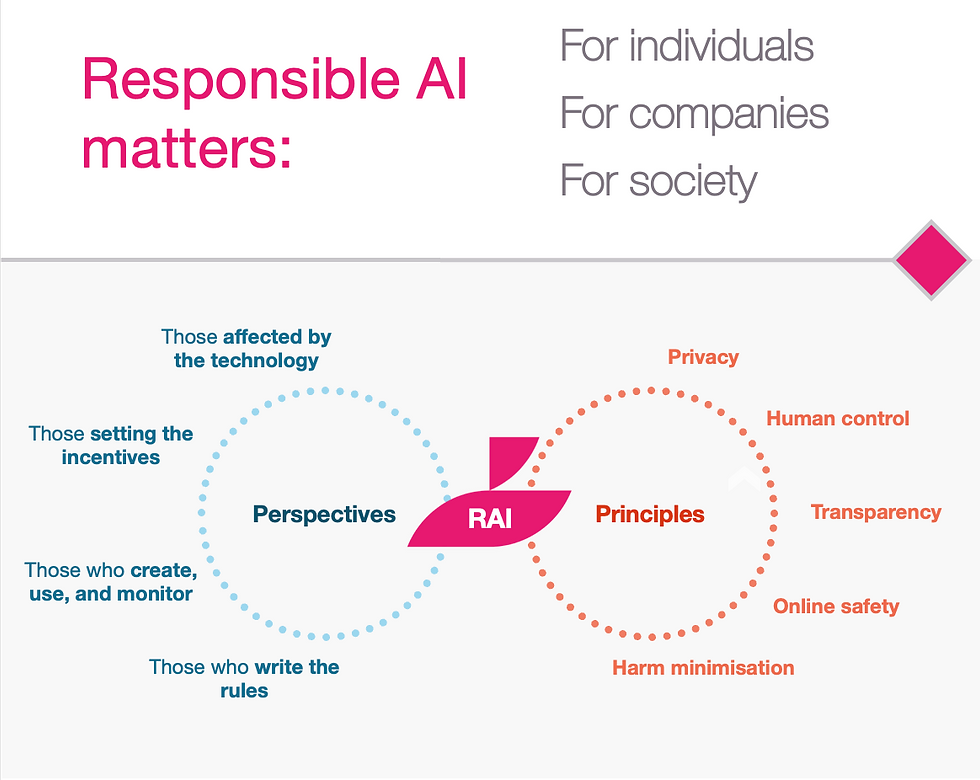

Responsible AI (RAI) involves the consistent application of principles and rules throughout the AI lifecycle to prevent harm, uphold human rights, and prioritize human-centric outcomes, balancing competitive pressures with societal interests.

Responsible AI needs to engage:

- Those affected by the technology: Citizen groups, consumers, and individual, corporate, and institutional users

- Those setting the incentives: Investors, procurers, funding bodies, and civil society

- Those who create, use, and monitor it: Tech companies, start-ups, researchers, ethicists, and monitoring bodies

- Those who write the rules: Policymakers, regulators, international organisations, and self-regulatory associations

Responsible AI aims to ensure:

- Privacy & IP: Placing the right to privacy and IP rights at the heart of principle-creation and rule-making

- Human in control: Practising effective human oversight over each relevant process and giving people control over AI systems

- Transparency: Focusing on clarity, truthfulness, and explainability of decisions and outputs

- Safety: Keeping people and their information safe and secure to foster trust

- Harm minimisation: Being vigilant, responsible, and accountable for the uses of AI

Why adopt a responsible approach to AI?

Avoid legal repercussions and hefty fines

Laws around the world are developing quickly. In the EU, for example, existing laws such as the GDPR and the DSA, together with incoming instruments such as the EU AI Act, continuously raise the bar with a view to a fully regulated future.

Responsibilities for developers and deployers of AI are already in place in jurisdictions such as the EU, the UK, various US states, and China.

Safeguard your reputation

Sensitive data leaks, copyright violations, bias and discrimination, liability for chatbot hallucinations, and exposing trade secrets are just some examples of risks in this space.

Organisations across geographies are facing intensifying backlash from whistleblowers, NGOs, and customers over AI misuse, failure to disclose their reliance on generative AI, and violations of fundamental rights.

(Re-)build trust with your people

A whopping 55% of the contributions to our research described feeling tracked online at best and spied on at worst, with AI’s role in these processes raising additional concerns.

Our studies have further shown that considering diverse perspectives when strategising about AI development and use is essential to (re-)building trust with stakeholders, partners, peers, and consumers.

Be on the right side of history

When organisations fail to put in place robust policies, strong principles, and effectively operationalised processes, they also miss out on the true benefits of responsible growth.

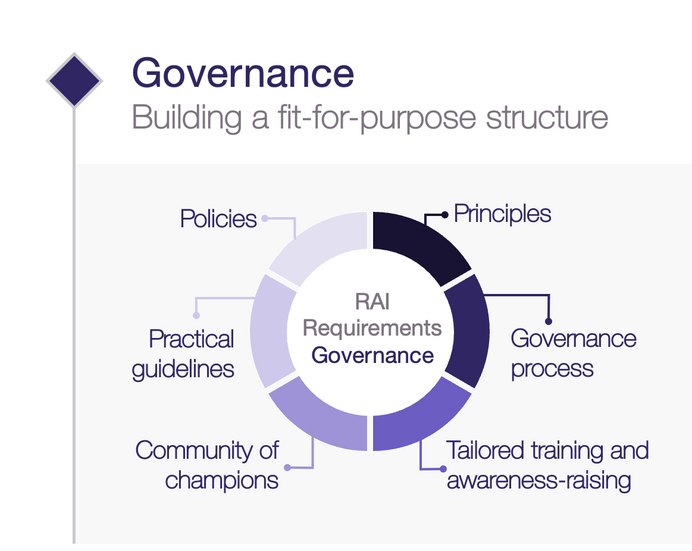

What does AI governance require?


Your road to RAI maturity starts here and now!

Let’s work together to:
